AI Adoption: From Compliance to Capability

- The increased use of artificial intelligence in higher education requires business schools to adopt pedagogical approaches that cultivate student agency, ethical judgment, and critical capability.

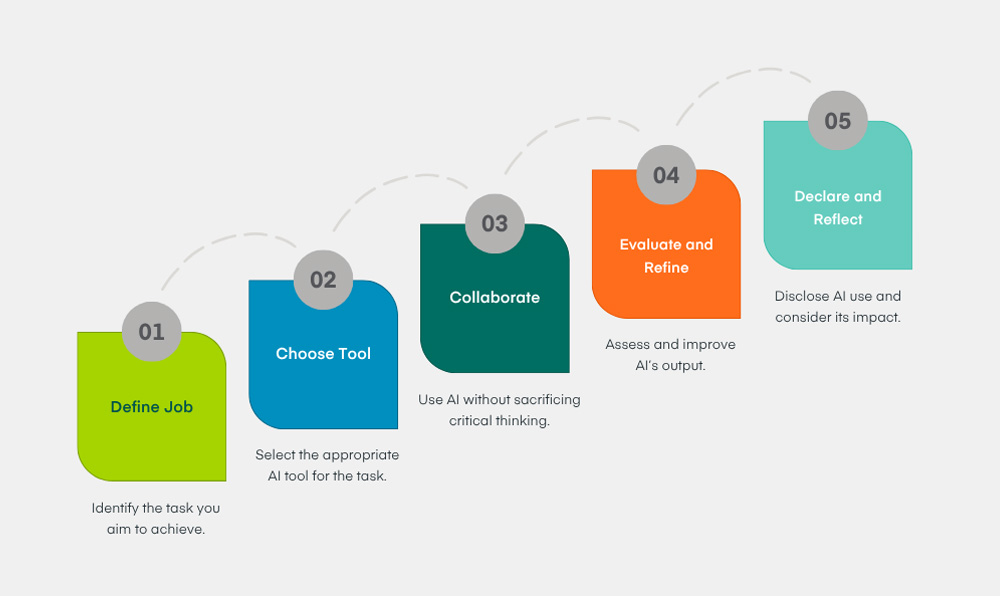

- Using a five-stage framework developed at the University of London, faculty can encourage students to collaborate with AI more intentionally, in ways that develop creative, responsible decision-making.

- When business schools integrate transparent and inclusive AI practices across their institutional cultures, they prepare graduates to lead ethically in AI-enabled workplaces.

Artificial intelligence (AI) has entered higher education at an unprecedented speed and on a massive scale. Most business schools initially responded to AI’s arrival with urgency by issuing policies, updating misconduct codes, and adopting detection software.

But now, business schools have a unique opportunity to shape the future of AI—and not only because they are natural incubators for innovation. If our graduates are to lead with integrity in AI-enabled workplaces, we must model that value in how we assess and support their learning. Moreover, AI is not just an academic tool; it is a societal force. Business schools can help ensure it is used in ways that benefit the public good.

At Royal Holloway Business School, University of London, we are reframing AI not as a threat to be controlled, but as a catalyst for capability. We have developed a five-stage approach that not only centers on student agency and ethical responsibility, but also reimagines business education for the AI age. Using this new framework as a guide, we are moving our AI strategy from compliance to capability, preparing students and faculty alike to engage with the technology critically, creatively, and confidently.

A Five-Stage Framework for Responsible Capability

Although concerns about academic misconduct and the potential for de-skilling are valid, framing the use of AI solely as a compliance issue misses the bigger picture. When students are told only what they cannot do with AI, they are left without guidance on what they can do responsibly. This creates a vacuum of uncertainty and, paradoxically, increases the likelihood of misuse.

What if we taught students how to collaborate with AI in ways that enhance their learning and deepen their ethical understanding?

Drawing on Clayton Christensen’s work on disruptive innovation and the Jobs to Be Done (JTBD) framework, our five-stage model puts the student in the driver’s seat. It shifts the primary question we ask about the technology from “Can I use AI?” to “What am I trying to do, and how might AI help me better accomplish that goal?”

When our students use AI, we guide them through the following stages:

- Define the Job to Be Done—What task are you trying to achieve?

- Choose the Right Tool—Which AI tool is fit for the purpose?

- Collaborate Critically—How will you use AI without outsourcing your thinking?

- Evaluate and Refine—What do you need to change, personalize, or verify?

- Declare and Reflect—How will you disclose AI use and reflect on its role in your work?

Figure: Integrating AI in Work (A Five-Step Framework)

In the process, students build metacognition and agency, two traits essential to lifelong learning and responsible leadership.

Case Studies: Putting AI Into Practice

We introduced this framework through three student-facing case studies to demonstrate how it works in real academic contexts:

Research Assistance: A student used AI to summarize and cluster themes from a large body of academic literature. The AI helped organize material, but the student selected, interpreted, and rewrote it in his own words.

Problem-Solving: A student in an undergraduate strategy course used AI tools to model different business scenarios and simulate outcomes. The student decided which strategies to test, analyzed the results, and then connected those results to real-world decisions.

Creative Brainstorming: A student in a marketing course used AI to generate ideas for a social-impact campaign for a sustainable fashion brand. The student filtered the AI suggestions through an understanding of brand strategy and the target audience, as well as through personal insight.

In all three cases, students retained control, reflecting on their processes and declaring their AI use transparently. The result: a more ethical, empowering, and authentic use of AI in learning.

To illustrate the framework’s practical application in greater depth, here’s how the student in the Creative Brainstorming case worked through the five stages:

- Define the Job to Be Done—The student identified the job as wanting “to generate fresh, purpose-driven ideas that connect Gen Z values with the brand’s sustainability story.”

- Choose the Right Tool—The student then selected ChatGPT and Canva’s AI visual generator, not to replace critical thinking but to spark divergent ideas and imagery around tone, audience emotion, and message framing.

- Collaborate Critically—Rather than accepting outputs at face value, the student treated the AI as a creative partner, questioning and remixing responses to ensure alignment with audience insights, brand personality, and ethical storytelling.

- Evaluate and Refine—The student then compared AI-generated taglines with peer feedback and real-world campaign benchmarks, refining language and visuals to enhance authenticity and emotional resonance.

- Declare and Reflect—In the final submission, the student disclosed the role of AI, detailing what it contributed. This disclosure included what she changed and what she learned about responsible creativity.

Using AI as a collaborative partner, this student demonstrated critical judgment, digital fluency, and ethical reflexivity. No longer just a tool of convenience, AI became a catalyst for capability.

Bringing the Framework to Life

Faculty can integrate this approach into any module where students are asked to think, create, or solve problems with digital tools:

- Invite students to articulate their “job to be done” before introducing AI tools to ensure that purpose drives technology, not the reverse.

- Encourage students to document their prompts, as well as their decisions and revisions, as evidence of critical engagement. Embed short reflection checkpoints (“How did AI shape your thinking?”) to transform AI use into a metacognitive exercise rather than a shortcut.

- Finally, make transparency part of assessment design by asking students to declare AI’s contributions and evaluate the ethical implications of those contributions.

The framework is not just procedural but transformative. It helps students and educators alike use AI with curiosity, confidence, and conscience.

More important, it treats learners not as potential rule-breakers, but as responsible co-creators of knowledge who are capable of ethical decision-making. This approach frames AI use as a reflective practice in which students develop their judgment through iteration and feedback.

By incorporating the framework into our guidance, assessment briefs, and classroom practices, we give students permission to experiment and take ownership of their academic journeys.

Innovation With Accountability

As part of Royal Holloway’s wider commitment to innovation with accountability, we are addressing responsible AI use throughout our curriculum, as well as in our professional development programs and industry partnerships. We believe that AI has the potential to transform education by widening access and supporting diverse learning needs, in ways that enable all students to thrive. It can accelerate creativity and experimentation, giving learners new ways to explore ideas and express themselves.

AI also can enhance student confidence and engagement by generating personalized feedback and adaptive learning experiences. And it can do so while connecting classroom learning to real-world skills and dilemmas that prepare students for the future.

But none of these benefits matter unless we also prepare students to navigate complexity with ethical awareness. That’s why our guidance includes templates for AI attribution, examples of acceptable use, and explanations of why certain uses require declaration. In short, we are creating a culture of responsible experimentation, where AI is neither feared nor fetishized, but interrogated and improved upon by our students.

Teaching students to be accountable in their use of AI is not just about their education. It’s about their employability and societal readiness. Business graduates are entering workplaces where AI is already commonplace in human resources, marketing, logistics, and finance. Our role is to equip students not just with technical fluency, but with moral imagination and strategic judgment.

Encouraging reflection and peer dialogue about AI dilemmas is essential to cultivating responsible judgment. Likewise, supporting staff members in using AI tools creatively and pedagogically will strengthen learning experiences. In addition, when we partner with industry to establish shared standards for ethical practice, we can ensure that our graduates harness AI not merely for efficiency or profit, but in service of society and the greater good.

Responsible AI use is not a destination. It is an iterative journey that is both relational and deeply human. By helping students define their purposes and reflect on their tools and processes, we shift the narrative from fearing AI as a threat to welcoming it as an opportunity.

AI and the Future of Business Education

While our framework was initially developed in a business school context, it can be applied across other faculties, from the humanities to the life sciences. By emphasizing student intentions and reflective engagement rather than discipline-specific norms alone, the framework offers a universal structure for fostering ethical and creative uses of AI in learning and assessment.

We see opportunities to integrate the framework into academic integrity training, faculty development, and course design processes. We want to prepare our community to use the technology as a catalyst that moves us forward as we strive to achieve several objectives:

To rethink digital literacy and academic purpose. With a shared language centered on purpose and critical evaluation, universities can shift AI conversations from focusing on anxiety and compliance to sparking curiosity and capability.

To widen access and improve learning equity. Students with disabilities, international students navigating language barriers, and first-generation students unfamiliar with academic norms can all benefit when we use AI as a scaffold for expression and confidence, as long as we do so with care.

To support social responsibility and ensure digital justice. As future business leaders, our students will grapple with AI’s biases, energy costs, and ethical and sustainability implications. Asking questions about such topics as part of students’ everyday learning, not just in specialist ethics modules and classes, ensures that the business school becomes a site not only of innovation but also of conscience.

As AI continues to evolve, so must our teaching. The business school of the future will not simply adopt AI tools but will cultivate a culture where innovation is grounded in accountability and curiosity. It will be a place where students and staff are encouraged not only to use AI, but to ask: Why? For whom? And with what impact?

No matter the future direction of higher education, promoting integrity in AI’s application will remain an essential aspect of our north star. As Christensen taught us, disruption creates opportunity. We need to make sure that it also creates responsibility.